In the digital age, the rapid dissemination of information, accurate or otherwise, can have profound implications for society, politics, and public trust. A recent case involving a satirical LinkedIn post about Ethiopia’s economic policy exemplifies how misinformation, even when intended as humor or satire, can quickly evolve into a serious challenge to democratic discourse. This incident, which involved a fake recommendation from the International Monetary Fund (IMF), reveals critical vulnerabilities in Ethiopia’s information ecosystem and offers broader insights into the mechanisms through which disinformation spreads during sensitive political moments, such as elections.

The Viral Journey of a Satirical Post

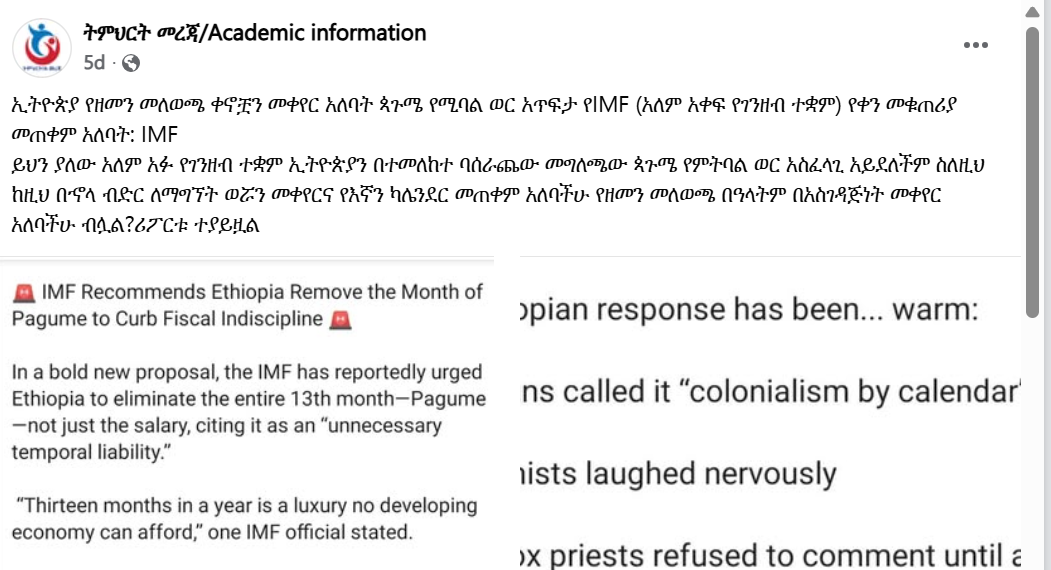

On July 23, 2025, a satirical LinkedIn post declared, “IMF Recommends Ethiopia Remove the Month of Pagume to Curb Fiscal Indiscipline.” Crafted to mimic a legitimate news report, the post suggested that the Ethiopian government should eliminate Pagume, the 13th month of its traditional calendar, to address macroeconomic concerns. Its tone was ironic, criticizing perceived overreach in economic policy, with a punchline about “thirteen months in a year being a luxury no developing economy can afford.”

While some LinkedIn users recognized the satire, the post was widely misread as genuine news on platforms such as TikTok, Facebook, and YouTube. Pagume, the 13th month of the Ethiopian calendar, lasts 5 days (6 in leap years), and it became the target of the fabricated IMF recommendation.
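The calendar arithmetic behind the joke can be made concrete. The sketch below assumes the commonly described structure of the Ethiopian calendar (twelve 30-day months followed by Pagume) and the usual rule that a year is a leap year when its number leaves a remainder of 3 on division by 4. The function names are illustrative and do not come from any library.

```python
# Illustrative sketch; function names are hypothetical, not from a library.
# Assumption: an Ethiopian year is a leap year when year % 4 == 3.

def pagume_days(ethiopian_year: int) -> int:
    """Return the length of Pagume, the 13th month, in days."""
    return 6 if ethiopian_year % 4 == 3 else 5


def year_length(ethiopian_year: int) -> int:
    """Twelve months of 30 days each, plus Pagume."""
    return 12 * 30 + pagume_days(ethiopian_year)


if __name__ == "__main__":
    # Print Pagume's length and the total year length for a few years.
    for year in (2015, 2016, 2017):
        print(year, pagume_days(year), year_length(year))
```

Under these assumptions, dropping Pagume would leave a 360-day year that falls out of step with the solar year by five or six days annually.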

Context: Economic Challenges & IMF Reports

The post appeared shortly after the International Monetary Fund (IMF) released a July 15, 2025, report on Ethiopia’s economic reform program, which highlighted progress alongside risks such as declining foreign aid and fragile security. At the time, news outlets were widely reporting on various IMF recommendations. This backdrop of the IMF report and social media discussion of Ethiopia’s economic situation most likely contributed to the satire’s plausibility and its subsequent misreading.

Spread across Platforms

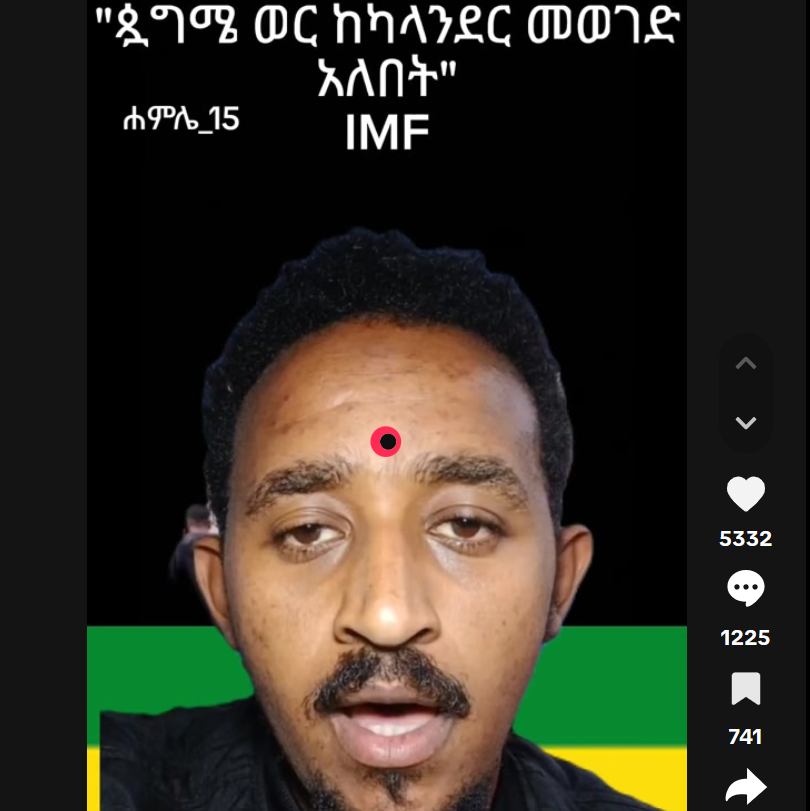

A TikTok influencer shared a screenshot of the post and explained it as genuine news, generating over 300,000 views and 3,500 shares.

Facebook users, including spiritual leaders, further amplified it.

A widely viewed YouTube podcast featured scholars who entertained conspiracy theories; one guest admitted not finding the story on the IMF website or mainstream media but believed it because he saw it on local television.

Even after the author clarified that it was satire, the misinformation persisted because corrections on social media were sparse or delayed.

Underlying Dynamics: Why Satire Became Misinformation

This incident exemplifies several fundamental dynamics behind the viral spread of misinformation:

1. Satire pretending to be fact

Language‑based machine‑learning research shows how satire often mimics the form and tone of real news, making automated and human detection difficult. For instance, Levi et al. have demonstrated that semantic and linguistic attributes such as coherence indicators and political tone are essential for differentiating satire from misinformation; however, mainstream audiences rarely engage in this analysis. Satirical works that reference authorities, even fictitiously, manipulate journalistic norms, thereby enhancing perceived legitimacy.

2. The virality engine

Social media algorithms prioritize sensational, emotionally charged content over factual accuracy. TikTok’s short‑video format and shareability, Facebook’s network structure, and YouTube’s recommendation system amplify content that triggers reactions. Research reported by Wired demonstrates that inoculation efforts (videos warning about misinformation tropes) work, but sensational content still spreads much faster. In this case, humor about economic policy made the satirical post highly shareable across platforms.

3. Authority bias and language barriers

Although some users recognized the satire, many deferred to perceived authority, especially when the content cited reputable institutions or used official language. This aligns with the RAND report and its follow-up book by Kavanagh & Rich, which define “Truth Decay” as including a “declining trust in formerly respected sources of factual information.” Crucially, they emphasize that even those within established institutions may struggle to differentiate fact from opinion when presented with seemingly authoritative content. This susceptibility extends to educated individuals, who can be misled by English-language content that appears to cite credible institutions. The deliberate use of English, coupled with fabricated IMF quotes and a news-style tone, exploited authority bias, leading people to defer to perceived expertise without critical evaluation. Research further demonstrates that internet access alone does not inoculate against falsehoods: among individuals lacking critical reasoning skills, language, framing, and presentation can override critical thinking.

4. Cultural resonance

Pagume, the 13th month in the Ethiopian calendar, holds significant religious and cultural importance as an integral part of the Ethiopian Orthodox Church calendar. The satirical fake news incident occurred amidst pre-existing economic stress, religious sensitivities, and political tension in Ethiopia. Content that touches on such symbolic or culturally significant themes, like Pagume, can evoke strong emotional responses, making audiences less likely to scrutinize the information critically. As one source notes, disinformation rooted in religious or cultural triggers can be particularly resilient and influential, especially when intertwined with grievances or sensitive issues. This is because symbolic beliefs tend to resist contradictory evidence. However, this instance of satirical fake news differs in two crucial respects. First, while its primary objective is to entertain and ridicule, it meticulously imitates the register of genuine news, thereby increasing its potential for deception. Second, it takes the form of a complete news article, rather than a brief tweet or an isolated online comment, further enhancing its credibility and reach.

Broader Implications Ahead of 2026 Elections

As Ethiopia approaches its next national electoral cycle, the viral “Pagume” satire exposes critical vulnerabilities in the country’s information ecosystem. What may seem like harmless humor can quickly become a vehicle for disinformation, particularly when it intersects with religious sensitivities, institutional trust, and elite discourse. Below are the broader implications and strategic insights that emerge:

Religious and Cultural Triggers Deepen the Impact

False narratives rooted in religious or cultural themes, such as those invoking Pagume or sacred figures, can have an outsized impact. These narratives are not only emotionally charged but also symbolically resonant, making them more durable and influential than fact-based corrections. In collectivist or religiously structured societies, rumors that connect to people’s culture can be harder to dismiss, even with facts. As electoral tensions rise, such themes may be weaponized to mobilize sectarian sentiment, erode public trust, or delegitimize state institutions. Disinformation of this nature becomes especially dangerous when it aligns with existing grievances or perceived injustice.

Paradox of English‑language parody

Satirical content crafted in English and styled to resemble institutional communication can be particularly misleading when it circulates among scholars, clergy, or public figures who may mistake it for legitimate discourse. This reflects a “literacy paradox”: higher education or fluency in English does not automatically equate to greater skepticism. Without prior exposure to disinformation tactics or parody formats, even critically trained individuals may fall prey to deceptive cues like logos, formal language, or institutional references. The problem is compounded when such figures unknowingly endorse or share the content, inadvertently amplifying its reach and credibility.

The Limits of Clarifications: Timing is Everything

Once misinformation spreads widely, even prompt corrections often fail to reverse the damage. Behavioral science shows that once a narrative embeds itself in public memory, simple fact-checks or disclaimers rarely undo belief, especially when the original content is vivid or emotionally resonant. This underscores the need for proactive, pre-viral interventions. Trusted platforms and institutions should be equipped not only to debunk falsehoods but also to issue prebunking disclaimers, contextual warnings, and consistent follow-ups before content gains traction. Waiting to correct after virality sets in is often too late.

Local media validation of falsehoods

Even reputable local media can inadvertently validate false claims when editorial standards are weak or confirmation protocols are absent. In the recent case, a scholar cited local television as a source despite the absence of any official confirmation, highlighting how assumed credibility can mask misinformation. As local broadcasters and digital media outlets gain influence, their role in verifying and contextualizing content becomes even more critical. Embedding fact-checking protocols, enforcing source verification, and training both media professionals and academic commentators in information hygiene will be essential to reduce the spread of falsehoods cloaked in media legitimacy.

Conclusion

The “Pagume” satirical post is more than a social media anecdote—it is a warning signal. In environments shaped by economic stress, religious symbolism, and elite influence, satire and misinformation can quickly evolve into destabilizing forces. As Ethiopia nears a pivotal election year, this case reveals layered vulnerabilities:

- Authority bias (trust in elites or institutions),

- Cultural leverage (use of identity-based triggers),

- Platform virality (amplification without verification), and

- Symbolic endurance (resilience of emotionally loaded narratives).

To build societal resilience and protect democratic discourse, a multi-pronged strategy must be adopted:

- Media and digital literacy: Train community leaders, influencers, journalists, and educators to identify misinformation, decode satire, and verify sources, especially in moments of heightened public emotion.

- Prebunking and inoculation: Launch targeted prebunking initiatives that expose manipulation tactics before users encounter them. This builds cognitive immunity and reduces the persuasive power of false content.

- Rapid response mechanisms: Empower NGOs, civil society groups, and electoral institutions with the tools to respond to viral misinformation within hours, not days, through coordinated messaging and pre-approved clarification templates.

- Editorial accountability in media: Establish verification checklists for broadcasters and podcast hosts, require on-air correction protocols, and publish transparent retraction notices when misinformation is aired.